LATEST ANNOUNCEMENTS05/29/2019: GPU class with guest lecturer, Steven Reeves, on Thursday (30th May)! 05/21/2019: Final project is set! See the Homework tab for instructions or go here. You have until finals week to finish this project but get started as soon as you finish your OpenMP homework, as it WILL take some time to formulate, code up and debug!

|

Introduction to High Performance Computing

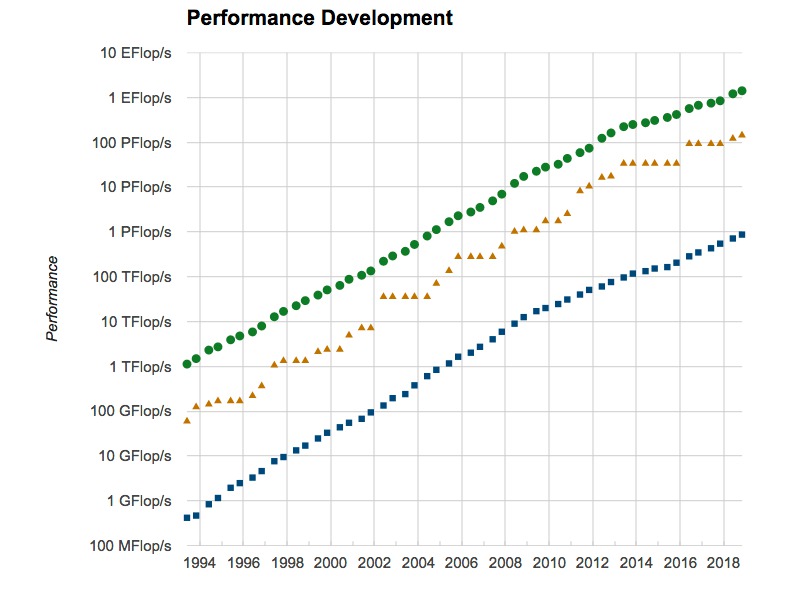

AMS 250 is a graduate course that introduces the student to the modern world of cutting-edge supercomputing. As high performance computing (HPC) rapidly becomes the third pillar of scientific research alongside theory and experimentation/observation, the need for skills in this area becomes apparent. And yet the supercomputing world is extremely daunting, as modern machines are extremely complex: almost all now are parallel, and many, if not most, exhibit heteregenous rather than homogenous architectures. This course is designed to put the novice student at ease, and teach the intermediate student some design paradigms for working with such machines. Working with parallel architectures introduces a whole new complex aspect to algortihmic design and modelling. This course will teach the basic principles and the basic tools necessary for students intent on using such machines for scientific research in the future.

Instructor

Nicholas Brummell

Applied Mathematics and Statistics

9-2122

brummell at soe.ucsc.edu

Office hours: M 5pm

Course times and location:

Physical Sciences 136

Tues/Thurs 1:30-3:05pm

Grading

Course will be evaluated on a series of homeworks and programming assignments (about 5) plus one final project.

Accommodations

If you qualify for classroom accommodations because of a disability, please get an Accommodation Authorization from the Disability Resource Center (DRC) and submit it to me in person outside of class (e.g., office hours) within the first two weeks of the quarter. Contact DRC at 459-2089 (voice), 459-4806 (TTY), or http://drc.ucsc.edu for more information on the requirements and/or process.

ARCHIVED ANNOUNCEMENTS

05/01/2019: Hummingbird is up and running again. New deadline for HW3 = tomorrow's class (05/02). 05/01/2019: There is also a new partition, that has been created for our exclusive use for this class. It contains 2 nodes with 48 and 64 coures for a total of 112 cores. Please access this partition by using "#SBATCH -p Course" 05/01/2019: Clarifications of some MPI things that came up in class: 1. Regarding the "row" vector derived datatype example code (reproduced below): call MPI_Type_vector(ncols,1,nrows,MPI_DOUBLE_PRECISION,& 04/23/2019: Homework 3 on parallel batch job scheduling on grape and hummingbird is set! See the Homework page and Lecture 7. 04/18/2019: Finish the task-channel symmetric pairwise interaction exercise! 04/18/2019: HW2 deadline extended to Monday Apr 22nd 9am 04/10/2019: Don't forget that HW1 is due before class starts on Thursday! 04/09/2019: If you cannot access grape, you will need to apply for a BSOE account. Go to |